VI-Bot

Virtual Immersion for holistic feedback control of semi-autonomous robots

VI-Bot integrates approaches from the areas of robotics, neurosciences and human-machine interaction into an innovative system designed for remote control of robotic systems. A novel exoskeleton with integrated passive safety, using adaptive and behaviour-predicting operator monitoring by means of online EEG analysis, and comprehensive virtual immersion and situational presentation of information and operational options, will convey an “on site-feeling” to the telemanipulating operator.

Project details

The complexity of both mobile robotic systems and their fields of application is continuously increasing, and is slowly reaching a point where direct user control or control by state-of-the-art AI is no longer economical. VI-Bot aims to enable an individual user to control such a complex robotic system. A safe exoskeleton, adaptive user observation, and a robust multi-modal user interface will act together, giving the user of a semi-autonomous robotic system the impression of being directly on site. By means of this virtual immersion, remote control of robotic systems will attain the next level: it will virtually dissolve the separation between robot and user and, as a consequence, bring together man’s cognitive abilities and the robotic system’s robustness. The efficiency of this approach will be evaluated by means of a complex manipulation task.

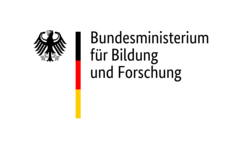

Experience with current tele-operation environments has demonstrated that both perceptive and motor strains on operators are very high. As a result, operators often do not register system warnings in time, and the error rate increases with the length of the operation. This is why the intended mutual control between operator and VI-Bot interface should be direct, dependable, fast, and tightly coordinated. The use of an adaptive “brain reading” interface (aBRI), still to be developed, will enable the VI-Bot interface both to determine whether the operator has noticed a presented warning and to predict the operator’s actions in order to prepare the system accordingly. VI-Bot is the first project of its type to integrate approaches from the areas of robotics, neurosciences, and human-machine interaction into a complete system, and it thus takes on the challenge of applying as yet mostly theoretical approaches to realistic, application-oriented scenarios. The elements which stand out in particular in this regard are:

- A novel exoskeleton, based on intelligent joint modules with integrated passive safety.

- A novel adaptive and behaviour-predicting operator monitoring by means of online EEG analysis.

- Comprehensive virtual immersion and situational presentation of information and operational options.

Videos

VI-Bot: Virtual immersion

Virtual Immersion for holistic feedback control of semi-autonomous robots

VI-Bot: Active Exoskeleton 2010

Teleoperation with the Active Exoskeleton

VI-Bot: Final active exoskeleton

Teleoperation with the final Active Exoskeleton

VI-Bot: Passive exoskeleton

Teleoperation with the Passive Exoskeleton

Exoskeleton and Tele-operation Control

The VI-Bot project takes into account and advances the current state of the art of haptic interfaces from both the hardware and the software point of view. The haptic device, namely the Exoskeleton and its control system, allows a complex interaction with the user, who is thereby enabled to perform a teleoperation task with a target robotic system. The Exoskeleton is designed on the basis of the anatomy of the human arm. The device is intended to be wearable, lightweight, and adaptable to different user sizes. Its kinematic structure is configured to constrain the movements of the user as little as possible, while offering a high level of comfort within the overall arm workspace.

The control strategy, based on a combination of classical and bio-inspired techniques, allows a better harmonization with the human arm’s nervous system and additionally implements different safety mechanisms. The development of a general position/force mapping algorithm provides intuitive and effective teleoperation of any complex robotic system of any given morphology.
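The position/force mapping algorithm itself is not described here. Purely as an illustration of the general idea, one simple way to couple an operator device to a robot of different size is to scale hand displacements into the robot workspace and to scale measured contact forces back to the operator. The sketch below assumes this basic scheme; `MappingConfig`, `map_position`, `map_force`, the scale factors, and the workspace limits are all hypothetical and are not taken from VI-Bot.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class MappingConfig:
    """Hypothetical parameters; none of these values come from the VI-Bot project."""
    position_scale: float = 1.5        # robot displacement per unit of hand displacement
    force_scale: float = 0.2           # operator force per unit of measured robot force
    max_feedback_force: float = 10.0   # N, cap on each force component sent to the operator
    workspace_min: Vec3 = (-0.5, -0.5, 0.0)  # m, assumed robot workspace box
    workspace_max: Vec3 = (0.5, 0.5, 1.0)


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def map_position(hand_pos: Vec3, hand_ref: Vec3, robot_ref: Vec3,
                 cfg: MappingConfig) -> Vec3:
    """Scale the hand displacement from its reference pose into a robot target,
    then clamp the target to the assumed workspace box."""
    return tuple(
        clamp(r + cfg.position_scale * (h - h0), lo, hi)
        for h, h0, r, lo, hi in zip(hand_pos, hand_ref, robot_ref,
                                    cfg.workspace_min, cfg.workspace_max)
    )


def map_force(robot_force: Vec3, cfg: MappingConfig) -> Vec3:
    """Scale the measured robot force down for the operator and cap each component."""
    return tuple(
        clamp(cfg.force_scale * f, -cfg.max_feedback_force, cfg.max_feedback_force)
        for f in robot_force
    )
```

Clamping on both paths is a deliberate choice in this sketch: the position clamp keeps commanded targets inside the assumed workspace, and the force cap bounds what the device can render to the operator's arm.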

Direct links to the robot systems:

Active Exoskeleton

Passive Exoskeleton

Here you can read more information about Exoskeleton and Tele-operation Control.

aBRI

The adaptive Brain Reading Interface (aBRI) is part of the VI-Bot system. It is a highly integrated control environment that monitors the operator's brain signals in real time. This allows impending movements to be anticipated and makes it possible to verify that alert signals have been consciously processed by the operator. It is thus a vital component of the VI-Bot system that interfaces with the exoskeleton and the virtual immersion subsystems, thereby extending the domain of man-machine interaction to the realm of thought processes.

Recently we set up an online BR system that detects certain EEG activity as it occurs and thereby makes it possible to predict whether environment alerts have been processed. At this point in the project our work focuses on the anticipation of impending movements and on the adaptiveness of the applied methods.
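The text does not say which EEG features or classifiers the online system uses. As a rough illustration only, the sketch below checks whether the signal in a window after an alert has a larger baseline-corrected mean amplitude than a threshold. The function name, the window lengths, the threshold, and the single-channel amplitude criterion are all assumptions made for this example; a real system would typically work on multi-channel data with a trained classifier.

```python
from statistics import mean
from typing import Sequence


def alert_was_processed(
    samples: Sequence[float],
    alert_index: int,
    sampling_rate_hz: float,
    baseline_s: float = 0.2,      # assumed pre-alert baseline length
    window_start_s: float = 0.3,  # assumed start of the response window
    window_end_s: float = 0.6,    # assumed end of the response window
    threshold_uv: float = 2.0,    # assumed amplitude threshold in microvolts
) -> bool:
    """Return True if the mean amplitude in the post-alert window exceeds the
    pre-alert baseline mean by more than threshold_uv (single channel only)."""
    base_len = int(round(baseline_s * sampling_rate_hz))
    start = alert_index + int(round(window_start_s * sampling_rate_hz))
    end = alert_index + int(round(window_end_s * sampling_rate_hz))
    if base_len == 0 or end <= start:
        raise ValueError("baseline and response window must be non-empty")
    if alert_index - base_len < 0 or end > len(samples):
        raise ValueError("not enough data around the alert")
    baseline = mean(samples[alert_index - base_len:alert_index])
    response = mean(samples[start:end])
    return (response - baseline) > threshold_uv
```

In an online setting, a function like this would be called once enough samples after an alert have arrived, so the decision lags the alert by at least `window_end_s`.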

Here you can read more about the aBR Interface.

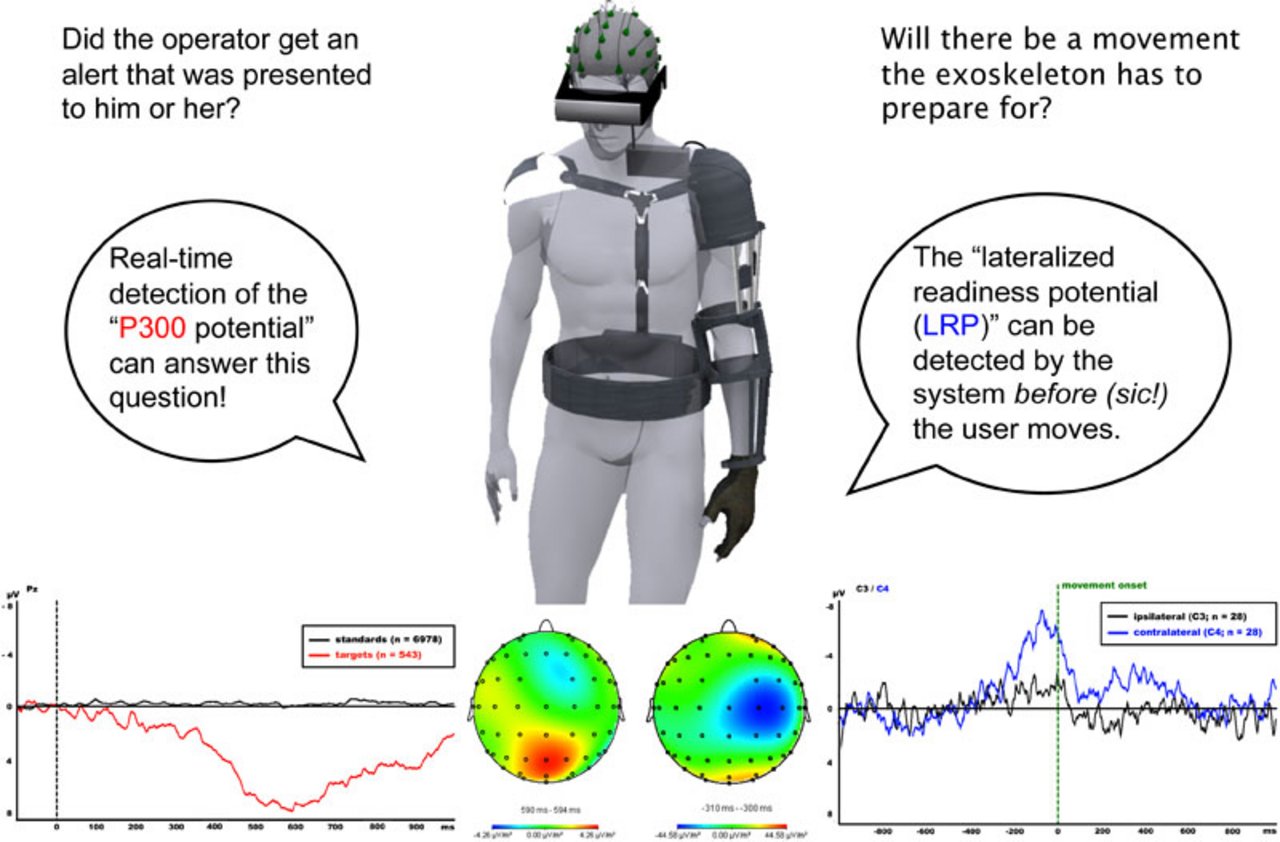

Demonstration Scenario

As a demonstration and evaluation scenario, a typical tele-operation environment will be developed that includes several typical elements of remote operation. The target platform will be a dexterous Mitsubishi PA-10 industrial robot, which will be tele-operated via the exoskeleton. The robot is mounted in a laboratory, and the operator has to complete three tasks: grabbing a tool, following a 3D contour with the tool, and releasing the tool at the end. The operator will do this using the exoskeleton to interact with the robot and the user interface, while wearing VR goggles that display a camera feed and a 3D model of the robot. The operator will also be equipped with the EEG system, connected to the aBRI system, to monitor her reactions. Throughout the run the operator has to watch out for optical warnings displayed in the VR goggles and has to confirm them.

This scenario uses all core components of VI-Bot. The operator controls the robot intuitively via the exoskeleton and has an in-situ feeling via the VR goggles. She is monitored by the aBRI system, so it can be checked whether she perceived warnings or ignored them on purpose because she was in a critical tele-operation phase. Additionally, the haptic feedback of the exoskeleton is supported by the aBRI, allowing for smoother and more natural haptic feedback. All VI-Bot components can be switched off individually, which allows for a thorough evaluation of the system's performance by comparing it to the non-enhanced state.
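Because each component can be switched off on its own, an evaluation can compare the full system with runs in which single components are disabled, and with the non-enhanced baseline. A minimal sketch of enumerating such conditions follows; the component names and the `Condition` type are hypothetical labels chosen for this example, not the project's actual configuration.

```python
from dataclasses import dataclass
from itertools import product
from typing import List

# Hypothetical component labels, not taken from the VI-Bot software.
COMPONENTS = ("haptic_feedback", "virtual_immersion", "abri")


@dataclass(frozen=True)
class Condition:
    """One evaluation condition: which components are switched on."""
    haptic_feedback: bool
    virtual_immersion: bool
    abri: bool

    @property
    def label(self) -> str:
        enabled = [name for name in COMPONENTS if getattr(self, name)]
        return "+".join(enabled) if enabled else "baseline"


def all_conditions() -> List[Condition]:
    """Every on/off combination, from the non-enhanced baseline to the full system."""
    return [Condition(*flags)
            for flags in product((False, True), repeat=len(COMPONENTS))]


def single_ablations() -> List[Condition]:
    """The full system with exactly one component switched off."""
    return [c for c in all_conditions()
            if sum(getattr(c, name) for name in COMPONENTS) == len(COMPONENTS) - 1]


if __name__ == "__main__":
    for condition in all_conditions():
        print(condition.label)
```

Comparing each entry of `single_ablations()` with the full system isolates the contribution of one component, while the `baseline` condition corresponds to the non-enhanced state.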

The demonstration scenario will be implemented in October 2010, with testing and evaluation carried out in the last quarter of the project.