DeeperSense

Deep-Learning for Multimodal Sensor Fusion

The main objective of DeeperSense is to significantly improve the environment perception capabilities of service robots in order to increase their performance and reliability, achieve new functionality, and open up new applications for robotics. DeeperSense adopts a novel approach that uses Artificial Intelligence and data-driven Machine Learning / Deep Learning to combine the capabilities of non-visual and visual sensors, with the objective of improving their joint environment perception beyond the capabilities of the individual sensors. As one of the most challenging application areas for robot operation and environment perception, underwater robotics was chosen as the domain in which to demonstrate and verify this approach. The project implements Deep Learning solutions for three use cases that were selected for their societal relevance and are driven by concrete end-user and market needs. During the project, comprehensive training data are generated. The algorithms are trained on these data and verified both in the lab and in extensive field trials. The trained algorithms are optimized to run on the on-board hardware of underwater vehicles, thus enabling real-time execution in support of autonomous robot behaviour. Both the algorithms and the data will be made publicly available through online repositories embedded in European research infrastructures. The DeeperSense consortium consists of renowned experts in robotics and marine robotics, artificial intelligence, and underwater sensing. The research and technology partners are complemented by end-users from the three use case application areas. Among other goals, the dissemination strategy of DeeperSense aims to bridge the gap between the European robotics and AI communities and thus strengthen European science and technology.

| | |
|---|---|
| Duration: | 01.01.2021 to 31.12.2023 |
| Donee: | German Research Center for Artificial Intelligence GmbH |
| Sponsor: | European Union |
| Grant number: | H2020-ICT-2020-2 ICT-47-2020, Project Number: 101016958 |
| Website: | https://www.deepersense.eu |
| Partner: | Universitat de Girona; University of Haifa; Kraken Robotik GmbH; Bundesanstalt Technisches Hilfswerk; Israel Nature and National Parks Protection Authority; Tecno Ambiente SL |
| Application Field: | Underwater Robotics; SAR- & Security Robotics |
| Related Projects: | TRIPLE-nanoAUV 1: Localisation and perception of a miniaturized autonomous underwater vehicle for the exploration of subglacial lakes (09.2020-06.2023); Mare-IT: Information Technology for Maritime Applications (08.2018-11.2021); REMARO: Reliable AI for Marine Robotics (12.2020-11.2024) |
| Related Robots: | DAGON: Subsea-resident AUV |

Project details

The applicability of autonomous robots in real-world applications (e.g. for inspection and maintenance) relies heavily on their sensing capabilities. Only if a robot is able to perceive its environment even under harsh environmental conditions (dirty, dark, turbid, confined) can it operate reliably, without putting the mission’s success at risk or even endangering human operators in the vicinity.

Visual sensing (e.g. cameras) is one of the most common perception techniques used in robotics, for tasks ranging from autonomous navigation and manipulation to mapping and object detection. Although cameras and other visual sensors are in principle able to provide very dense and detailed information about the robot’s environment, their performance depends highly on the ambient lighting and visibility conditions and can degrade drastically if these conditions are not optimal (e.g. in dark or hazy environments). If decision-making and control algorithms rely strongly on data from these sensors, the negative impact on the real-world usability of an autonomous robot can be significant. It is a common phenomenon that autonomous robots that work perfectly well in the lab (i.e. under controlled lighting and visibility conditions) fail miserably once they are exposed to the real world (i.e. dynamic, unpredictable, and sub-optimal lighting and visibility conditions).

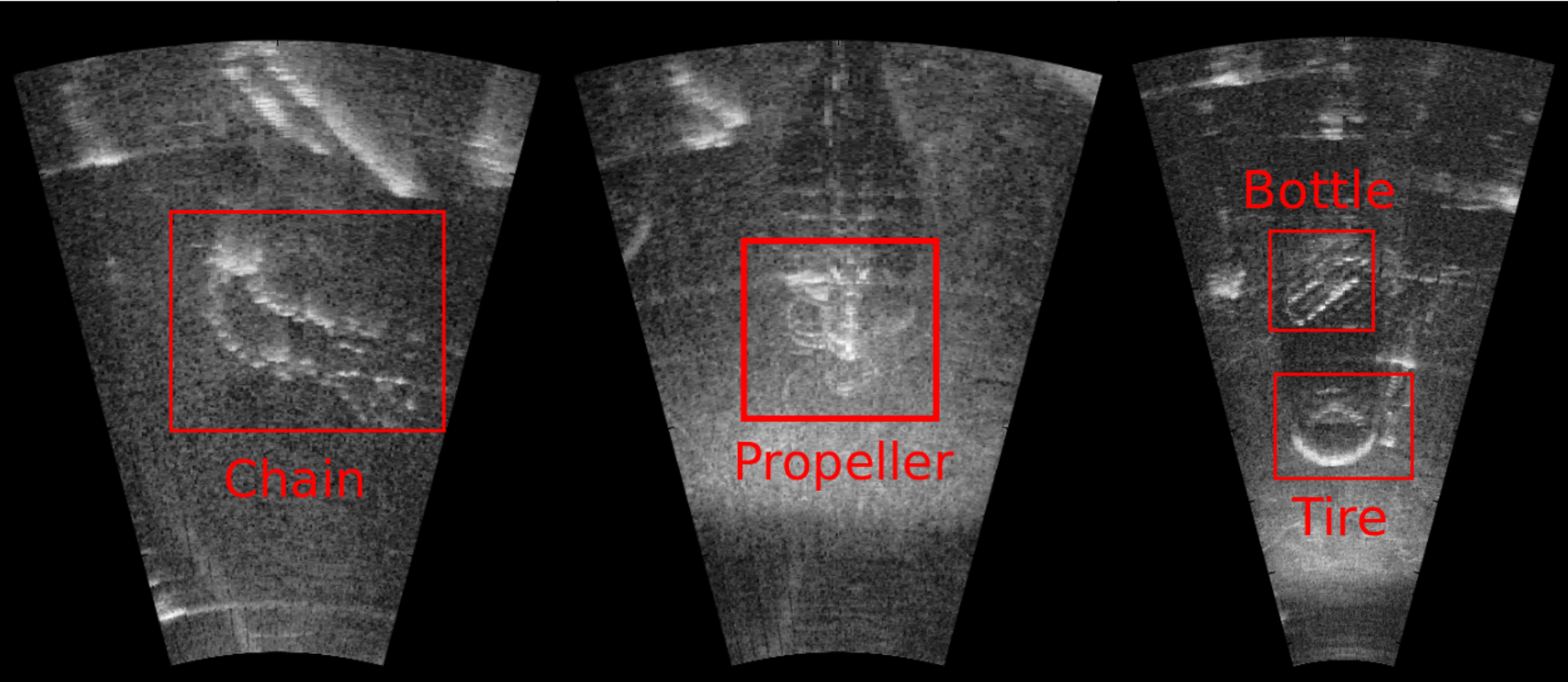

On the other hand, alternative non-visual sensing techniques have emerged, inspired by the sensing capabilities of some animal species. Examples are acoustics, magnetoreception and thermoception. Acoustic sensing, for example, is not affected by bad visibility and poor lighting conditions and can be used to provide range measurements or even image-like data. Acoustic sensors (e.g. ultrasound) are used in terrestrial applications, but in the form of SONAR (SOund Navigation And Ranging) they are used particularly in marine (underwater) applications, where factors like color absorption, optical distortion, mirroring effects, bad lighting conditions and, most importantly, low visibility in turbid waters significantly limit the capabilities of visual sensing. Conversely, the main shortcomings of acoustic and other non-visual sensing methods are their low spatial resolution compared to visual sensors and the fact that, particularly in dynamic environments, they typically suffer from a low signal-to-noise ratio. Figure 1-1 shows three typical sonar images with objects that are hard to distinguish and classify even for a trained human eye. In general, these shortcomings make it difficult to use non-visual sensing methods for robotic perception, or even for human operators of remote-controlled robots.

However, it is possible to mitigate the shortcomings of non-visual sensing by enabling such sensors to learn latent features from other sensing modalities (including visual) and thus transfer knowledge between sensors, both about high-level features and low-level measurements. With the advent of data-driven AI techniques such as deep learning for image recognition and natural language processing, powerful tools are available to extract information embedded in (sensor) data by means of directly inferring input-output relations.

The marine and underwater application domain was chosen as a suitable field for the demonstration of the DeeperSense concept because it represents the prototype of an extremely harsh and difficult (from a sensing point of view) environment. The focus in the project is on visual and acoustic sensors. However, the general concept of inter-sensoric learning is applicable to all robotic application domains and many sensor modalities.

The DeeperSense concept will be demonstrated in three use cases. These use cases were selected both for their importance in advancing the state of the art in robotics and for their societal relevance.

USE CASE I: Hybrid AUV for Diver Safety Monitoring:

In many underwater applications related to inspection & maintenance, emergency response and other underwater operations, professional divers still play an important role. While regular professional and industrial diving is already inherently dangerous and needs to be well planned and monitored, safety aspects are even more important for professional diving related to civil protection and emergency response. Here, compared to standard industrial diving, only very limited time may be available for planning, while the divers still have to perform complex tasks such as welding, cleaning, and debris removal.

The divers typically have to perform these activities in complex environments with many (artificial) objects and obstacles, such as ports, piers, industrial basins, and channels. This makes it difficult to navigate and self-localize. In addition, even if visibility is good at the beginning of the dive, almost all of the activities mentioned above increase the turbidity of the water, which regularly leads to white-out situations. Figure 1-2 shows some tasks that cause turbidity around the diver: the use of a pneumatic drill (A), drilling into a piece of wood (B), or pumping operations (C).

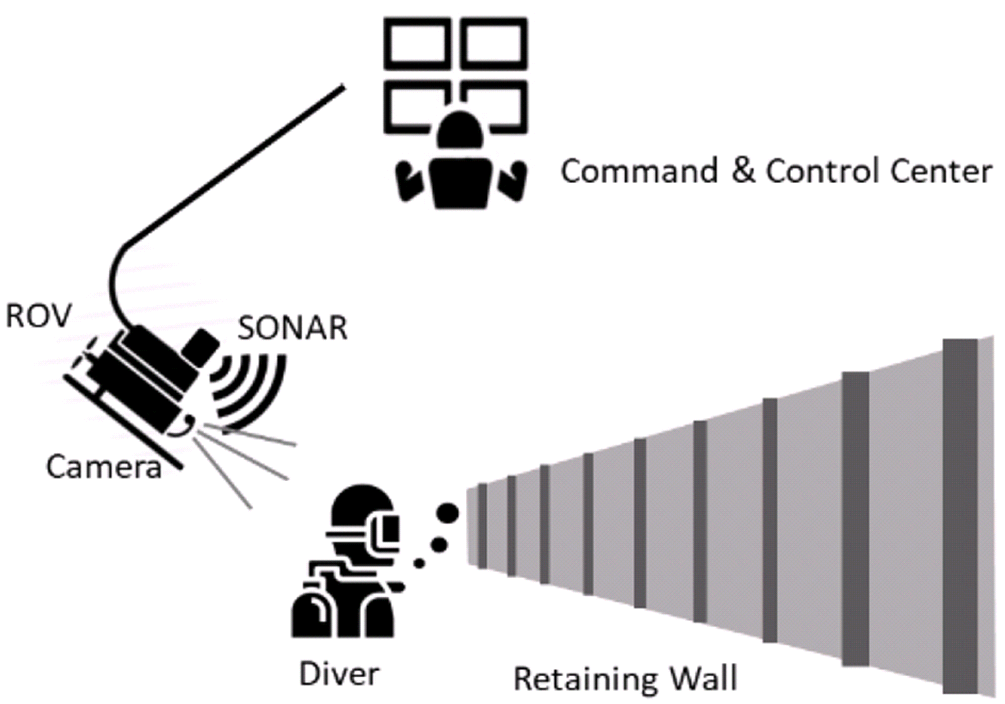

In such white-out situations, as in standard working situations, it is essential for the safety of the diver to receive guidance and instructions from an operator in the mission control center who has an overview of the situation and knows where the diver is, what the diver is doing, and where the diver should move next. State-of-practice diving equipment includes a first-person viewpoint camera mounted directly on the helmet. In addition, Remotely Operated Vehicles (ROVs) are sometimes used to observe and instruct the diver from a third-person perspective (Figure 1-3). Under perfect visibility conditions this may suffice, but for monitoring heavy underwater work that creates significant turbidity, both the helmet camera and the ROV camera are of limited use.

In DeeperSense, we will equip a hybrid AUV with a sonar sensor that has learned from an HD camera how to reliably interpret low-resolution sonar signals of a diver and transform them into a visual view. This visual image of the diver will then be used by the human operator in the control center to evaluate the actions, state and position of the diver, as well as by the follow-me control algorithm of the hybrid AUV to keep a constant distance to the diver (Figure 3). As this application relies only on acoustic SONAR data, it will not be affected by low visibility, darkness or other optical disturbances.
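The follow-me behaviour mentioned above can be illustrated with a minimal sketch, assuming a simple proportional controller on the sonar-derived range to the diver. The project text does not specify the actual control law, so the function name, gain, target distance and speed limit below are hypothetical values chosen purely for illustration.

```python
def follow_me_surge_command(measured_range_m, target_range_m=3.0,
                            gain=0.5, max_speed_mps=0.5):
    """Return a forward (surge) speed command in m/s.

    Positive values move the vehicle towards the diver, negative values
    back it away. All parameters are illustrative assumptions, not values
    taken from the DeeperSense project.
    """
    # Positive error: the diver is further away than desired.
    error_m = measured_range_m - target_range_m
    command = gain * error_m
    # Saturate so the vehicle never approaches or retreats too fast.
    return max(-max_speed_mps, min(max_speed_mps, command))


if __name__ == "__main__":
    for range_m in (5.0, 3.0, 2.5):
        print(range_m, follow_me_surge_command(range_m))
```

In this sketch the range would come from the sonar-based diver detection, so the loop keeps working when the cameras see nothing. A real vehicle would also need heading and depth control, filtering of noisy range estimates, and safety limits.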

The use case will be driven by the end-user THW and demonstrated with real divers in a training facility under continuously decreasing visibility conditions.

USE CASE II: Surveying and Monitoring Complex Benthic Environments, e.g., Coral Reefs:

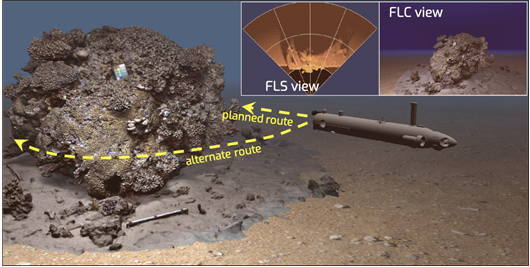

Exploring complex underwater structures (such as a coral reef) with an Autonomous Underwater Vehicle (AUV) requires advanced environment perception and navigation capabilities, in particular related to Obstacle Avoidance (OA). OA for AUVs has gained importance as the use of AUVs is increasing rapidly in a wide range of scientific, commercial and military applications, such as archaeological surveys, ecological studies and monitoring, wreck exploration, subsea equipment inspection and underwater mine detection.

Both visual sensors (cameras) and acoustic sensors (sonar) can be used to detect obstacles underwater. However, acoustic sensors, which are most commonly used for OA in underwater applications, are not effective at close range (< 5 m) due to interference noise and multi-path phenomena. In addition, acoustic sensors can provide only 2D information on the surroundings, rather than a full 3D structure, and with limited resolution and detail. Acoustic OA is therefore almost useless in complex underwater environments that require delicate maneuvering, such as artificial structures, wrecks, canyons or reefs. Standard OA schemes are also very basic, usually only instructing the AUV to ascend until the obstacle disappears from the sensor.
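To make the basic "ascend until clear" scheme concrete, here is a minimal sketch. It assumes a hypothetical callable `obstacle_ahead(depth_m)` that stands in for the sonar detection at a given depth; the step size and surface limit are likewise illustrative assumptions and not taken from any real AUV software.

```python
def basic_ascend_avoidance(obstacle_ahead, depth_m, step_m=0.5, min_depth_m=0.0):
    """Ascend in fixed steps until the sensor no longer reports an obstacle.

    Depth is in metres, positive downwards. `obstacle_ahead` is a
    hypothetical stand-in for the sonar detection. Returns the depth at
    which the path is clear, or `min_depth_m` if that limit is reached
    first.
    """
    while depth_m > min_depth_m and obstacle_ahead(depth_m):
        depth_m = max(min_depth_m, depth_m - step_m)
    return depth_m


if __name__ == "__main__":
    # Toy scene: a wall whose top edge lies at 6 m depth.
    wall = lambda depth_m: depth_m >= 6.0
    print(basic_ascend_avoidance(wall, depth_m=10.0))
```

The sketch shows why the scheme is so limiting: the only manoeuvre is vertical, so the vehicle ends up above the structure rather than navigating through it.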

Optical OA, on the other hand, is still in its infancy for underwater applications, with only a few recent academic attempts in clear water and simple scenes, and no commercial products. This is mainly due to the poor visibility and contrast in underwater optical images, which result in a very limited imaging range. Nevertheless, optical OA would be a better solution for close-range OA and navigation. In addition, cameras are much cheaper and smaller than sonar sensors. Using cameras instead of sonars would support more efficient and cost-effective vehicles, eventually facilitating the development and deployment of swarms of vehicles at reasonable cost.

In DeeperSense we will develop a novel visual OA system to facilitate delicate robotic underwater missions such as surveys of complex underwater environments. The visual OA system will be able to overcome the normal limits of visual sensors by learning features from a Forward Looking Sonar (FLS).

The system will be integrated with an AUV and used to explore a coral reef in the Red Sea. The new OA system will enable the AUV to fly right through the coral reef instead of having to stay well above it, which is currently the standard procedure.

The exploration of a coral reef was chosen as use case because coral reefs are among the most important marine habitats, occupying only 0.1% of the area of the ocean but supporting 25% of all marine species on the planet. Most reef-building corals are colonial organisms of the phylum Cnidaria. Their growth creates epic structures that can be seen from space. These structures not only harbor some of the world’s most diverse ecosystems, but also provide valuable services and goods such as shoreline protection, habitat maintenance, seafood products, recreation, and tourism. Latest estimates suggest coral reefs provide close to US$30 billion each year in goods and services, including coral reef related tourism. Furthermore, due to their immobility, corals have developed an arsenal of chemical substances that hold great medicinal potential. Coral reef ecosystems have suffered massive declines over the past decades, resulting in a marine environmental crisis. Today, coral reefs face severe threats as a result of climate change and anthropogenic-related stress. Mapping of coral reefs provides important information about a number of reef characteristics, such as overall structure and morphology, abundance and distribution of living coral, and distribution and types of sediment.

Thus, affordable AUVs that are able to improve the environmental monitoring of this fragile ecosystem are very much in the interest of the end user, the Israel Nature and Parks Authority (INPA).

USE CASE III: Sub-Sea multisensor bottom mapping and interpretation for geophysics:

High-resolution maps of the seafloor, with topographical features, bottom type, habitats of benthic life forms, and other features identified and classified correctly, are the basis for the scientific exploration, environmental monitoring, and economic exploration of the oceans.

Today, surveys to create such maps are typically conducted from sea-going vessels, using side-scan sonars and multibeam echosounders. Both the depth measurements and the acoustic return (backscatter) of the sonars are taken into account for the interpretation, which is done manually and off-line by a group of experts in marine geology or biology. To assist the interpretation, video samples are taken to enable a visual check of the properties of the bottom and thus provide ground truth. Due to the limited range and limited operational conditions of optical cameras underwater, such video samples are acquired over small areas only and are therefore not representative of the much larger areas typically covered by the acoustic sensors.

There is a strong need to be able to conduct these types of surveys using autonomous robotic platforms (AUVs) in which sensing and mission planning are intertwined, so that the survey pattern can be adapted on-the-fly to the terrain encountered. Enabling AUVs to perform large-area acoustic mapping missions with integrated benthic classification will lead to significant cost savings in survey operations. It will also contribute to a better characterization of the benthos, and thus to better-informed decision making when planning interventions or monitoring human impact.

In DeeperSense, we will use advanced machine learning techniques to enable multisensor fusion of different sources, mainly side-scan sonars but also multibeam echosounders. Optical imagery will be used as an additional layer for training, classification and benchmarking. The overall objective is that, by the end of the project, an AUV can perform accurate sea-floor characterization without the need for optical imagery.
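The idea of training with optical imagery but classifying from acoustic data alone can be sketched with a deliberately simple stand-in. The example below assumes each seafloor cell is described by two hypothetical, normalised acoustic features (side-scan backscatter and a multibeam depth-variation measure) and uses a nearest-centroid classifier in place of the project's deep learning models, which are not described in this text. The feature values and class names are invented toy data.

```python
from collections import defaultdict
from math import dist


def fit_centroids(acoustic_features, optical_labels):
    """Average the acoustic feature vectors of each optically labelled class."""
    grouped = defaultdict(list)
    for features, label in zip(acoustic_features, optical_labels):
        grouped[label].append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def classify(centroids, features):
    """Return the label whose centroid is closest to the feature vector."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Toy training cells: (side-scan backscatter, multibeam depth variation),
# both normalised to [0, 1]. The labels stand in for classes that experts
# would read off optical imagery; all values are invented for illustration.
train_features = [(0.2, 0.1), (0.3, 0.2), (0.8, 0.7), (0.9, 0.6)]
train_labels = ["sand", "sand", "rock", "rock"]
centroids = fit_centroids(train_features, train_labels)

# At survey time only the acoustic features are available.
print(classify(centroids, (0.25, 0.15)))
print(classify(centroids, (0.85, 0.65)))
```

The optical data appears only in the training labels. Once the centroids are fitted, classification needs nothing but the two acoustic channels, which mirrors the stated objective of characterizing the sea floor without optical imagery.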

The solution will be validated and demonstrated on one or several of the many survey missions that the end-user Tecnoambiente (TA) conducts each year. Developed methods will also be demonstrated with data acquired by the AUVs available within the consortium.

Videos

DeeperSense: Learning for Multimodal Sensor Fusion

The main objective of DeeperSense is to enhance underwater sensing capabilities by applying Deep Learning methods to fuse data from sensors that exhibit different sensing modalities. DFKI's role in this project is to train methods that learn an association between visual sensors, such as RGB cameras and laser scanners, and acoustic time-of-flight sensors, such as forward-looking sonars (FLS). The goal is then to deploy the trained models to generate visual-like images using only acoustic data, to aid the monitoring of divers under bad visibility conditions.

DeeperSense: Meet the Consortium

Project Coordinator Dr. Thomas Vögele, from the German Research Center for Artificial Intelligence, gives a brief introduction to the DeeperSense project and the trials in the Maritime Exploration Hall that were conducted together with the Bundesanstalt Technisches Hilfswerk (THW) and Kraken Robotik GmbH.