ActGPT

Adaptive robot ConTrol with Generative Pre-trained Transformers

The ActGPT project aims to combine the predictive capabilities of large language models and large multimodal AI models with the physical capabilities of complex dynamic robots. Such a link between AI and the physical capabilities of dynamic robots, which typically require precise system models, opens several opportunities, such as reducing the dependence on expert knowledge and manual engineering in the development of robot control strategies, making highly dynamic robotic systems more generally applicable, and improving their autonomy in dynamically changing environments.

| Duration: | 01.04.2025 till 28.02.2028 |
| Donee: | German Research Center for Artificial Intelligence GmbH |
| Sponsor: | Federal Ministry of Education and Research |
| Grant number: | 01IW25002 |
| Application Field: | Logistics, Production and Consumer; Assistance- and Rehabilitation Systems |
| Related Projects: | M-Rock: Human-Machine Interaction Modeling for Continuous Improvement of Robot Behavior (08.2021-07.2024); VeryHuman: Learning and Verifying Complex Behaviours for Humanoid Robots (06.2020-05.2024); AAPLE: Expanding the Action-Affordance Envelope for Planetary Exploration using Dynamics Legged Robots (03.2023-02.2025) |
| Related Robots: | RH5 Manus: Humanoid robot as an assistance system in a human-optimized environment; Quad B12: Quadrupedal Research Platform |
| Related Software: | ARC-OPT: Adaptive Robot Control using Optimization; HyRoDyn: Hybrid Robot Dynamics |

Project details

Currently, we are seeing rapid progress in AI, driven primarily by advances in reinforcement learning (RL) methods and transformer-based neural networks. For example, large language models (LLMs), as used by ChatGPT, have shown impressive results in general-purpose language generation. However, AI should not only exhibit communicative intelligence but also the intelligence needed to interact with the physical world, as required, e.g., by dynamic robots such as humanoids. Yet current LLMs and other large AI models hardly involve physical interaction with the environment.

Conversely, recent advancements in robotics have enabled a new generation of highly dynamic robots that have demonstrated impressive feats of dynamic or even athletic behavior. The most notable of these is the Atlas humanoid robot built by Boston Dynamics, which can walk and run naturally, perform 360-degree jumps and backflips, and dance with near-human agility. Other examples include the ostrich-inspired humanoid robot platform Digit (Agility Robotics) and the H1 humanoid from Unitree.

While all these systems achieve impressive results in individual, precisely defined tasks, the link between their motion capabilities, which are mostly based on advanced mechanics and modern control theory, and artificial intelligence is usually missing. Thus, ActGPT pursues the following main objective:

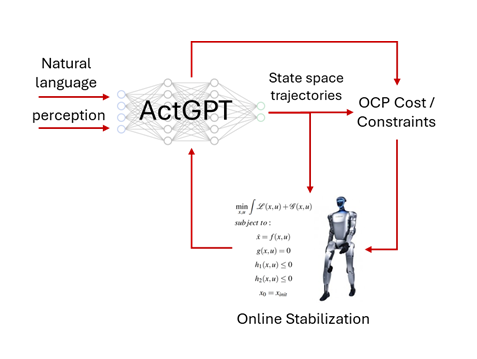

To link the predictive capabilities that can be observed in large language models and large multimodal models to the physical capabilities of complex dynamic robots

To achieve the main objective, we pursue three sub-goals in ActGPT:

- To enable large AI models to generate dynamic robot motions using natural language and images as input. To generate dynamically consistent robot trajectories, it is necessary that the AI model understands the dynamics of the robot being operated. One way to achieve this is to insert physics-informed layers into the transformer network, which can be trained using RL. The link between LLMs and RL has already been investigated, and we plan to extend the existing research to Large Multimodal Models (LMMs) and highly dynamic robots.

- To enable large AI models to synthesize optimal control (OC) problems using natural language and images as input. OC provides a semantically rich description of dynamic robot tasks in terms of costs and constraints. As an intermediate interface, we define a simple domain-specific language (DSL) to describe the OC problem. One way to generate programs within this DSL is to combine LLMs with neural-network-guided (NN-guided) program search, an approach that can lead to unexpected and general solutions. The generated OC program can then be used to produce dynamic robot motions while ensuring physical consistency.

- To improve the robustness and stability of large AI models, which are known to deliver unreliable and error-prone outputs at times. This will be achieved by integrating constraints from model-based control at the training stage, for example when training individual layers of a transformer network in a physics simulation, and by applying online behavior stabilization methods. Both approaches can provide the physical grounding that the AI models lack.
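The DSL for optimal control problems is not specified on this page. Purely as an illustration of what "a description in terms of costs and constraints" could look like, the following minimal Python sketch defines a toy problem description and evaluates a candidate trajectory against it. All names (`Cost`, `Constraint`, `OCProblem`, `reach_target`, `max_speed`) and the two-dimensional state `[position, velocity]` are hypothetical and chosen only for this example; they are not part of ActGPT, ARC-OPT, or HyRoDyn.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

# Assumption for this sketch: a state is [position, velocity],
# and a trajectory is a sequence of such states.
State = Sequence[float]


@dataclass
class Cost:
    """A weighted running cost evaluated at every state (hypothetical)."""
    name: str
    weight: float
    fn: Callable[[State], float]


@dataclass
class Constraint:
    """An inequality constraint, satisfied when fn(state) <= 0 (hypothetical)."""
    name: str
    fn: Callable[[State], float]


@dataclass
class OCProblem:
    """Toy container for an optimal control task: costs plus constraints."""
    horizon: int
    costs: List[Cost] = field(default_factory=list)
    constraints: List[Constraint] = field(default_factory=list)

    def total_cost(self, trajectory: Sequence[State]) -> float:
        # Sum every weighted cost term over all states of the trajectory.
        return sum(c.weight * c.fn(x) for x in trajectory for c in self.costs)

    def violations(self, trajectory: Sequence[State]) -> List[Tuple[int, str]]:
        # Report (time step, constraint name) for every violated constraint.
        return [
            (k, g.name)
            for k, x in enumerate(trajectory)
            for g in self.constraints
            if g.fn(x) > 0.0
        ]


# A task such as "move to position 1.0 without exceeding a speed of 2.0"
# could be expressed as one cost term and one constraint.
problem = OCProblem(
    horizon=3,
    costs=[Cost("reach_target", 1.0, lambda x: (x[0] - 1.0) ** 2)],
    constraints=[Constraint("max_speed", lambda x: abs(x[1]) - 2.0)],
)

# A hand-written candidate trajectory; the middle state is too fast.
trajectory = [[0.0, 0.0], [0.5, 2.5], [1.0, 0.0]]

print(problem.total_cost(trajectory))
print(problem.violations(trajectory))
```

In this sketch, a generated program would be accepted only if `violations` returns an empty list, which is one simple way to picture how a cost-and-constraint description can be checked for consistency before a motion is executed. A real OC formulation would additionally include the robot dynamics and a solver, both of which are omitted here.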

To evaluate the methods developed in ActGPT, we will conduct experiments on increasingly complex real systems with different sensory-motor setups, such as a double pendulum, a quadruped, and a bipedal robot. The final goal is to control a humanoid robot using natural language input, linking high-level commands to dynamic robot motions.