VeryHuman

Learning and Verifying Complex Behaviours for Humanoid Robots

Validating deep-learning-based systems for use in safety-critical applications is inherently difficult, because their sub-symbolic mode of operation does not provide adequate levels of abstraction for representing them and proving their correctness. The VeryHuman project aims to synthesize such levels of abstraction by observing and analysing the upright walking behaviour of a two-legged humanoid robot. The theory to be developed is the starting point for defining an appropriate reward function to optimally control the movements of the humanoid by means of reinforcement learning, as well as for a verifiable abstraction of the corresponding kinematic models, which can be used to validate the behaviour of the robot more easily.

Project details

- First, robust robot hardware along with an accurate simulation of the system is required. For example, the robot can be subject to a large number of holonomic constraints, including internal closed loops and external contacts, which pose challenges to the accuracy of the simulation.

- Second, control algorithms of this kind can be hard to implement due to a lack of knowledge about rewards and constraints. As an example, consider the upright walking movement of a two-legged humanoid robot. It is not immediately clear how one can specify the task of “upright walking”. We might try to relate different body parts (head above shoulders, shoulders above waist, waist above legs) or use physical stability criteria (centre of pressure, zero moment point, etc.), but do these really specify walking, and what are its non-trivial properties? This leads to the non-trivial task of defining a suitable reward function for (deep) reinforcement learning approaches, or a cost function for optimal control approaches, along with constraints.

- How can we formulate and prove properties of a complex humanoid robot, and

- how can we efficiently combine reinforcement learning and optimal control-based approaches, and

- how can we make use of symbolic properties to derive a reward function in a deep reinforcement learning approach or optimal control approach for complex use cases such as humanoid walking?
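To make the reward-specification problem concrete, the following minimal sketch turns the two kinds of criteria mentioned above into a reward: a symbolic ordering of body parts and a zero moment point (ZMP) stability check. It is an illustration under stated assumptions, not the project's method: `RobotState`, its fields, the weights, and the convex counter-clockwise support polygon are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class RobotState:
    """Hypothetical, heavily simplified state used only for illustration."""
    head_z: float                  # height of the head in metres
    shoulder_z: float              # height of the shoulders in metres
    waist_z: float                 # height of the waist in metres
    foot_z: float                  # height of the stance foot in metres
    zmp: Point                     # zero moment point on the ground plane (x, y)
    support_polygon: List[Point]   # convex polygon, counter-clockwise vertices


def posture_ordered(state: RobotState) -> bool:
    """Symbolic property: head above shoulders above waist above feet."""
    return state.head_z > state.shoulder_z > state.waist_z > state.foot_z


def inside_convex_polygon(point: Point, polygon: List[Point]) -> bool:
    """True if the point lies inside or on a convex counter-clockwise polygon."""
    n = len(polygon)
    for i in range(n):
        (x1, y1), (x2, y2) = polygon[i], polygon[(i + 1) % n]
        # A negative cross product means the point is to the right of the edge.
        cross = (x2 - x1) * (point[1] - y1) - (y2 - y1) * (point[0] - x1)
        if cross < 0:
            return False
    return True


def reward(state: RobotState, w_posture: float = 1.0, w_stability: float = 1.0) -> float:
    """Sum of the weights of all satisfied properties (assumed weighting)."""
    total = 0.0
    if posture_ordered(state):
        total += w_posture
    if inside_convex_polygon(state.zmp, state.support_polygon):
        total += w_stability
    return total


foot = [(0.0, 0.0), (0.2, 0.0), (0.2, 0.1), (0.0, 0.1)]
upright = RobotState(1.9, 1.6, 1.0, 0.0, (0.1, 0.05), foot)
tipping = RobotState(1.9, 1.6, 1.0, 0.0, (0.3, 0.05), foot)
print(reward(upright), reward(tipping))
```

Note that a robot standing perfectly still collects the full reward here, which illustrates the point made above: posture and stability criteria alone do not specify walking.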

Videos

RicMonk: A Three-Link Brachiation Robot with Passive Grippers for Energy-Efficient Brachiation

This paper presents the design, analysis, and performance evaluation of RicMonk, a novel three-link brachiation robot equipped with passive hook-shaped grippers. Brachiation, an agile and energy-efficient mode of locomotion observed in primates, has inspired the development of RicMonk to explore versatile locomotion and maneuvers on ladder-like structures. The robot's anatomical resemblance to gibbons and the integration of a tail mechanism for energy injection contribute to its unique capabilities. The paper discusses the use of direct collocation to optimize trajectories for the robot's dynamic behaviors and the stabilization of these trajectories using a time-varying linear quadratic regulator. With RicMonk we demonstrate bidirectional brachiation and provide a comparative analysis with its predecessor, AcroMonk, a two-link brachiation robot, to demonstrate that the presence of a passive tail helps improve energy efficiency. The system design, controllers, and software implementation are publicly available on GitHub.

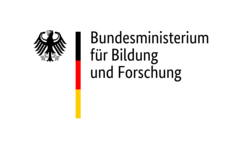

M-RoCK+VeryHuman: Whole-Body Control of Series-Parallel Hybrid Robots

The video illustrates the results of the paper by Dennis Mronga, Shivesh Kumar, Frank Kirchner: "Whole-Body Control of Series-Parallel Hybrid Robots", accepted for publication at the IEEE International Conference on Robotics and Automation (ICRA), 23.5.-27.5.2022, Philadelphia, 2022.

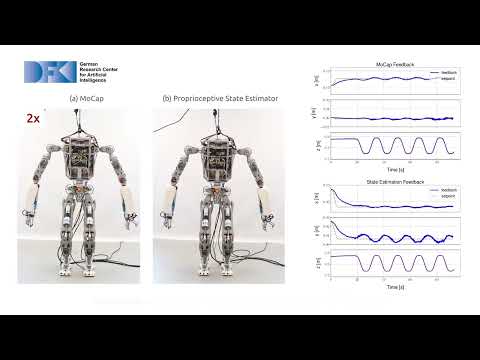

RH5: Motion Capture State Feedback for Real-Time Control of a Humanoid Robot

The video illustrates the results of the paper by Mihaela Popescu, Dennis Mronga, Ivan Bergonzani, Shivesh Kumar, Frank Kirchner: "Experimental Investigations into Using Motion Capture State Feedback for Real-Time Control of a Humanoid Robot", accepted for publication in the MDPI Sensors Journal, Special Issue "Advanced Sensors Technologies Applied in Mobile Robot", 2022.

RH5 Manus: Background of robot dance generation based on music analysis driven trajectory optimization

RH5 Manus: Robot Dance Generation based on Music Analysis Driven Trajectory Optimization

Musical dancing is a ubiquitous phenomenon in human society. Giving robots the ability to dance has the potential to make human-robot coexistence more acceptable. Hence, dancing robots have generated considerable research interest in recent years. In this paper, we present a novel formalization of robot dancing as planning and control of optimally timed actions based on beat timings and additional features extracted from the music. We showcase the use of this formulation in three different variations: with input of a human expert choreography, imitation of a predefined choreography, and automated generation of a novel choreography. Our method has been validated on four different musical pieces, both in simulation and on a real robot, using the upper-body humanoid robot RH5 Manus.

RH5 Manus: Introduction of a Powerful Humanoid Upper Body Design for Dynamic Movements

Recent studies suggest that a stiff structure along with an optimal mass distribution are key features for performing dynamic movements, and parallel designs provide these characteristics to a robot. This work presents the new upper-body design of the humanoid robot RH5, named RH5 Manus, with a series-parallel hybrid design. The new design choices allow us to perform dynamic motions, including tasks that involve a payload of 4 kg in each hand and fast boxing motions. The parallel kinematics combined with an overall serial chain of the robot provide high force production along with a larger range of motion and low peripheral inertia. The robot is equipped with force-torque sensors, a stereo camera, laser scanners, high-resolution encoders, etc. that enable interaction with operators and the environment. We generate several diverse dynamic motions using trajectory optimization and successfully execute them on the robot with accurate trajectory and velocity tracking, while respecting joint rotation, velocity, and torque limits.

RH5 Manus: Humanoid assistance robot for future space missions

The humanoid robot "RH5 Manus" was developed as part of the "TransFIT" project as an assistance robot that can be used in the direct human environment, for example on a future moon station. The aim was to equip the robot with the necessary capabilities to perform complex assembly work autonomously, as well as in cooperation with astronauts and teleoperated. Another focus of the project was on the transfer of the developed technologies to industrial manufacturing and production. The video shows the mechanical assembly and the commissioning of the robot.

RH5: Design, Analysis and Control of the Series-Parallel Hybrid RH5 Humanoid Robot

This paper presents a novel series-parallel hybrid humanoid called RH5, which is 2 m tall, weighs only 62.5 kg, and is capable of performing heavy-duty dynamic tasks with 5 kg payloads in each hand. The analysis and control of this humanoid are performed with a whole-body trajectory optimization technique based on differential dynamic programming (DDP). Additionally, we present an improved contact-stability soft-constrained DDP algorithm which is able to generate physically consistent walking trajectories for the humanoid that can be tracked via simple PD position control in a physics simulator. Finally, we showcase preliminary experimental results on the RH5 humanoid robot.

Torque-limited simple pendulum: A toolkit for getting started with underactuated robotics

This project describes the hardware (computer-aided design (CAD) models, bill of materials (BOM), etc.) required to build a physical pendulum system and provides the software (Unified Robot Description Format (URDF) models, simulation, and controllers) to control it. It provides a setup for studying established and novel control methods on a simple torque-limited pendulum, and targets students and beginners in robotic control. In this video we cover the mechanical and electrical setup of the test bed, introduce offline trajectory optimization methods, and showcase model-based as well as data-driven controllers. The entire hardware and software description is available as open source.
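As a rough illustration of why a torque limit makes even a simple pendulum an interesting control problem, the sketch below simulates a frictionless pendulum whose motor is too weak to lift it directly and pumps energy into it with a textbook energy-shaping law. This is not the project's software or API: the parameter values, function names, and the choice of energy shaping are assumptions made for this example only.

```python
import math

# Assumed illustrative parameters; not taken from the project's hardware.
MASS = 0.5       # kg, point mass at the end of the rod
LENGTH = 0.5     # m
GRAVITY = 9.81   # m/s^2
TAU_MAX = 1.0    # N*m, motor torque limit
GAIN = 1.0       # energy-shaping gain (hypothetical tuning)


def clip_torque(tau: float, limit: float = TAU_MAX) -> float:
    """Saturate the commanded torque at the motor limit."""
    return max(-limit, min(limit, tau))


def energy(theta: float, omega: float) -> float:
    """Total energy; theta = 0 is hanging down, potential is zero at pivot height."""
    kinetic = 0.5 * MASS * LENGTH ** 2 * omega ** 2
    potential = -MASS * GRAVITY * LENGTH * math.cos(theta)
    return kinetic + potential


def energy_shaping_torque(theta: float, omega: float) -> float:
    """Push in the direction of motion while below the upright energy level."""
    desired = MASS * GRAVITY * LENGTH  # energy of the pendulum at rest upright
    return clip_torque(-GAIN * omega * (energy(theta, omega) - desired))


def simulate(theta: float, omega: float, duration: float = 5.0, dt: float = 0.001):
    """Integrate the dynamics with semi-implicit Euler and record the torques."""
    torques = []
    for _ in range(round(duration / dt)):
        tau = energy_shaping_torque(theta, omega)
        alpha = (tau - MASS * GRAVITY * LENGTH * math.sin(theta)) / (MASS * LENGTH ** 2)
        omega += alpha * dt
        theta += omega * dt
        torques.append(tau)
    return theta, omega, torques


# Holding the pendulum horizontally would need m*g*l of torque, more than TAU_MAX.
print("static torque needed:", MASS * GRAVITY * LENGTH, "limit:", TAU_MAX)
theta_end, omega_end, applied = simulate(theta=0.0, omega=0.1)
print("energy gained:", energy(theta_end, omega_end) - energy(0.0, 0.1))
```

The controller never exceeds the torque limit, yet the pendulum's energy rises because the torque always acts in the direction of motion while the energy is below the upright level. Stabilizing the upright position itself would require an additional controller near the top, which is omitted here.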