MaLeBeCo

Machine Learning Application Benchmarking on COTS Inference Processors

The objective of the MaLeBeCo project is to build a test-bed for comparing and benchmarking machine learning applications on low-, mid- and high-performance architectures. This is of particular interest because it makes it possible to cover the wide application area spanned by the different mission scenarios and use-cases. These use-cases include, among others, the provision of pre-processed smart payload data, guidance, navigation and control (GNC) for satellites as well as robots, on-board AI for an increased level of autonomy, intelligent data exploration algorithms, and AI in operations on ground or in orbit.

| Duration: | 01.10.2021 till 30.09.2022 |

| Donee: | German Research Center for Artificial Intelligence GmbH |

| Sponsor: | ESA |

| Grant number: | ESA Contract No. 4000135724/21/NL/AS |

| Partner: | Airbus Defence and Space |

| Application Field: | Space Robotics |

| Related Projects: | SpaceClimber: A Semi-Autonomous Free-Climbing Robot for the Exploration of Crater Walls and Bottoms (09.2006-09.2009); iStruct: Intelligent Structures for Mobile Robots (05.2010-08.2013); LIMES: Learning Intelligent Motions for Kinematically Complex Robots for Exploration in Space (05.2012-04.2016); TransTerrA: Semi-autonomous cooperative exploration of planetary surfaces including the installation of a logistic chain as well as consideration of the terrestrial applicability of individual aspects (05.2013-12.2017); RIMRES: Reconfigurable Integrated Multi Robot Exploration System (09.2009-12.2012) |

| Related Robots: | MLAD: Machine Learning Accelerator Demonstrator |

Project details

The main objectives of the activity cover the following aspects:

Generation of a reusable and open reference dataset:

For this activity, several use-cases have been proposed for selection in the benchmarking and shall be made publicly available during the project:

1) Pose estimation,

2) Anomaly detection and

3) Image classification.

Evaluation and selection of suitable target platforms:

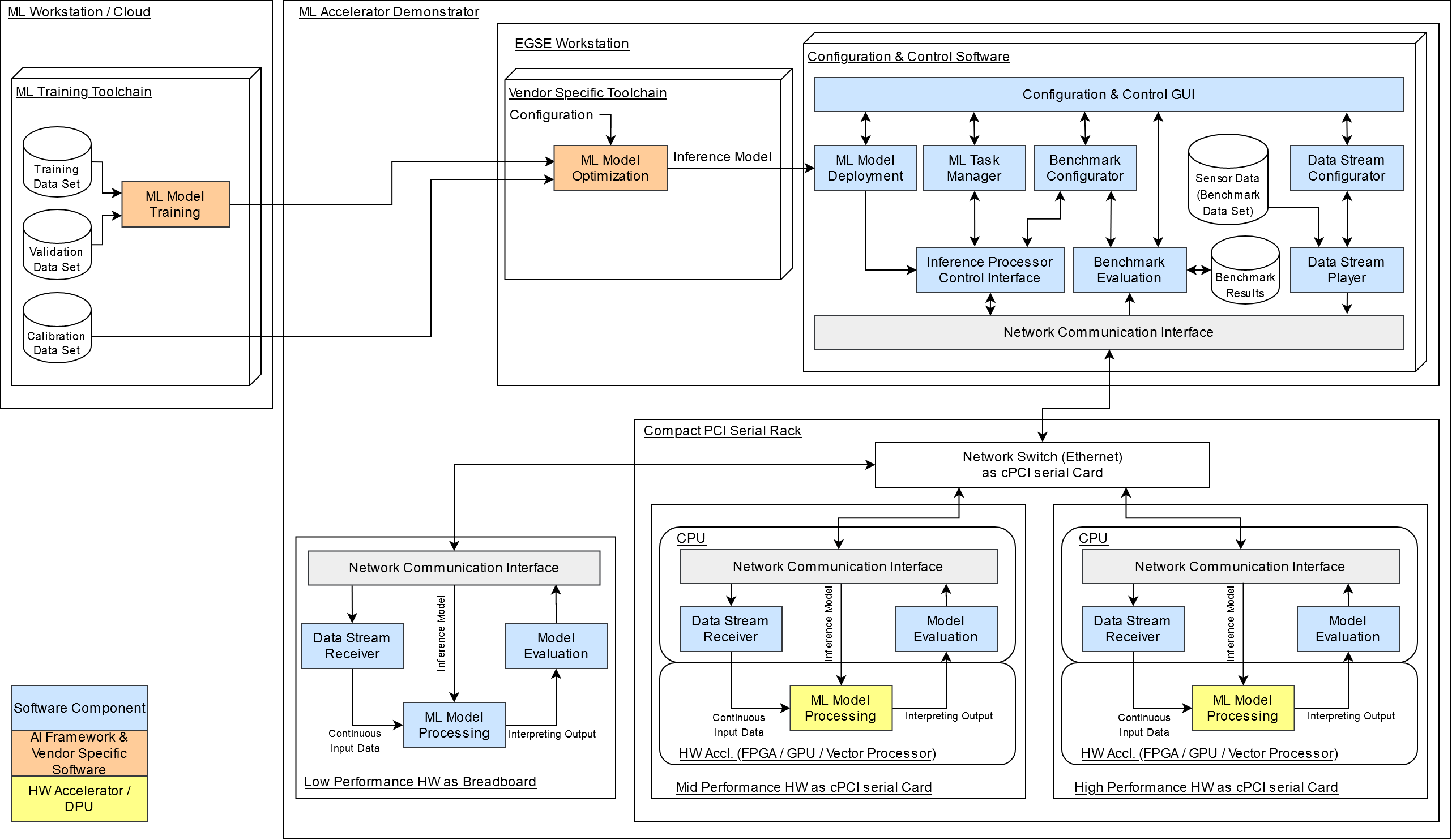

Huge efforts are being made to build radiation-tolerant and/or radiation-hardened processor units as well as to evaluate, alter and qualify specific COTS processors. For the activity, three different processor units are proposed, each covering a different performance area: low-performance, mid-performance and high-performance. All pre-selected processor units are potentially applicable in space missions.

Development of ML algorithms representative for tasks required for future space missions:

It is understood that the benchmarking shall contain representative applications that are typical of future space applications of AI techniques. Therefore, it is planned to start with a detailed analysis of the requirements of a varied set of space applications, ranging from high-performance, mission-critical on-board applications to low-performance, less mission-critical ones.

Development of an efficient and reliable method of ML task management on the target platform:

It is planned to make the developed inference models interchangeable on the target platforms. Furthermore, it is proposed to deploy models developed for future use-cases on the target platforms. This deployment will be controlled via the EGSE GUI in order to ensure ease of use and reliable operation of the entire task management and configuration process.
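The idea of interchangeable inference models can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `TargetPlatform` class, its `deploy`, `select` and `run` methods, the platform name and the toy models are hypothetical and do not describe the project's actual EGSE software or any device API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Assumption: a "model" is any callable mapping an input vector to an output vector.
InferenceFn = Callable[[List[float]], List[float]]


@dataclass
class TargetPlatform:
    """Hypothetical stand-in for one target platform; not a real device API."""

    name: str
    models: Dict[str, InferenceFn] = field(default_factory=dict)
    active: Optional[str] = None

    def deploy(self, model_name: str, fn: InferenceFn) -> None:
        """Store a model under a name; redeploying a name replaces the old model."""
        self.models[model_name] = fn

    def select(self, model_name: str) -> None:
        """Make a previously deployed model the active one."""
        if model_name not in self.models:
            raise KeyError(f"{model_name!r} is not deployed on {self.name}")
        self.active = model_name

    def run(self, inputs: List[float]) -> List[float]:
        """Run the active model on one input."""
        if self.active is None:
            raise RuntimeError(f"no model selected on {self.name}")
        return self.models[self.active](inputs)


# Toy models standing in for real inference networks.
platform = TargetPlatform("mid-performance-unit")
platform.deploy("double", lambda xs: [2.0 * x for x in xs])
platform.deploy("negate", lambda xs: [-x for x in xs])

platform.select("double")
doubled = platform.run([1.0, 2.0])

platform.select("negate")  # swap models without touching the calling code
negated = platform.run([1.0, 2.0])
```

In this sketch the caller only ever uses `run`, so exchanging the model is a configuration step rather than a code change, which is the property a GUI-controlled deployment would rely on.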

Development and execution of a benchmarking solution for HW suitable target applications:

It is foreseen to specify a maximum workload for each benchmark on all selected AI accelerator hardware devices. The use-cases will be defined such that different amounts of operations have to be performed. Two types of metrics are to be considered: metrics from a computational point of view, and performance metrics such as accuracy and precision. For each benchmark, at least one metric will be specified. The associated post-processing functions will be part of the benchmark metric definition.

Preparation of an ML accelerator demonstrator:

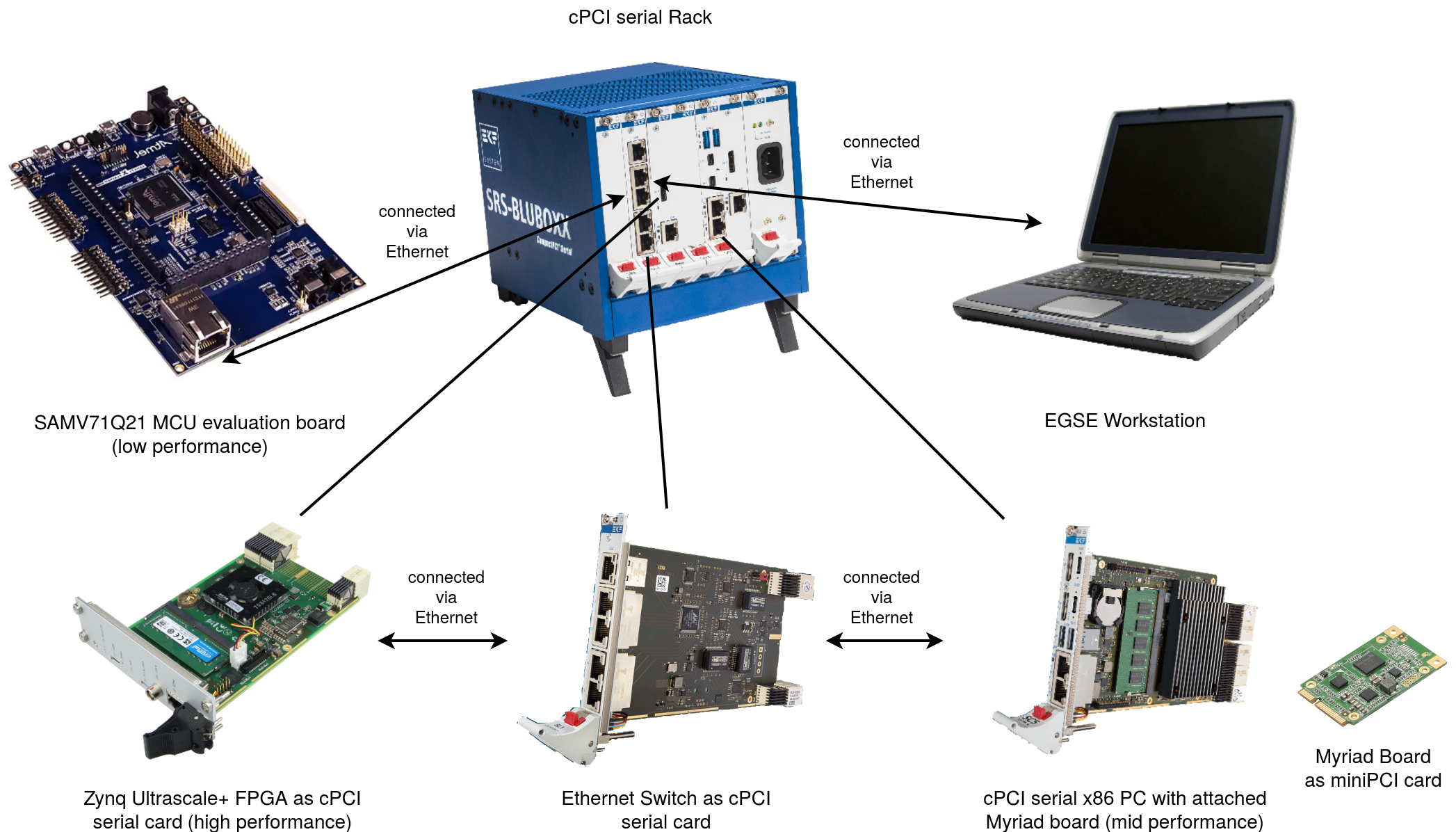

To perform the benchmark, an ML accelerator demonstrator will be deployed. It comprises all preselected hardware platforms as well as a central EGSE workstation. The demonstrator will be designed in a generic manner, so that further hardware platforms and/or use-cases can be added to the benchmark in the future.

Evaluation of results and provision of standard inference workflow:

The ML accelerator demonstrator serves as an initial setup and demonstration of a hardware and ML benchmark for space applications. So that further use-cases and hardware platforms can be added in the future, a standardized inference workflow will be evaluated and described as a result of the activity.
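The two metric families named for the benchmarks, computational metrics and performance metrics such as accuracy and precision, can be illustrated with a minimal sketch. The function names, the choice of mean latency as the computational metric and the convention of returning 0.0 when nothing is predicted positive are assumptions made here for illustration; the project's actual metric definitions are not given in this text.

```python
import time
from typing import Callable, List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that equal the reference label."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def precision(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """True positives divided by all predicted positives."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t != positive)
    if tp + fp == 0:
        return 0.0  # assumption: report 0.0 when nothing was predicted positive
    return tp / (tp + fp)


def mean_latency_s(fn: Callable[[List[float]], object],
                   inputs: List[List[float]],
                   repeats: int = 3) -> float:
    """Average wall-clock seconds per call of fn over all inputs and repeats."""
    if not inputs or repeats < 1:
        raise ValueError("need at least one input and one repeat")
    start = time.perf_counter()
    for _ in range(repeats):
        for x in inputs:
            fn(x)
    elapsed = time.perf_counter() - start
    return elapsed / (repeats * len(inputs))


# Toy reference labels and predictions for a binary classification use-case.
labels = [1, 0, 1, 0]
predictions = [1, 1, 1, 0]

acc = accuracy(labels, predictions)
prec = precision(labels, predictions)
latency = mean_latency_s(lambda x: sum(x), [[1.0, 2.0], [3.0, 4.0]])
```

Keeping the quality metrics separate from the timing function reflects the distinction made above: the former depend only on model outputs, while the latter depends on the hardware the model runs on.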