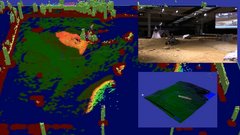

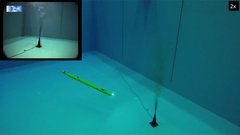

DeeperSense: Learning for Multimodal Sensor Fusion

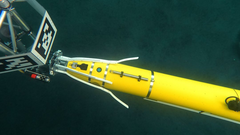

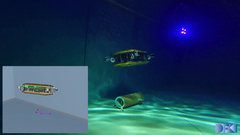

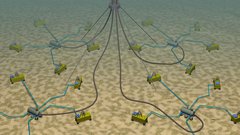

The main objective of DeeperSense is to enhance underwater sensing capabilities by applying deep learning methods to fuse data from sensors with different sensing modalities. DFKI's role in the project is to train models that learn an association between visual sensors, such as RGB cameras and laser scanners, and acoustic time-of-flight sensors, such as forward-looking sonars (FLS). The trained models are then deployed to generate visual-like images from acoustic data alone, helping to monitor divers in poor visibility conditions.